Stage 1 of Track the Planet from DefCon 31

2023 @nyxgeek

Part 1: OneDrive Enumeration, Infrastructure Setup

See also:

Part 2: Username lists, Organization lists, Automating Scraping

Coming Soon:

Part 3: Data Analysis

DefCon Talk: Here

DefCon Slides: Here

Introduction and Background

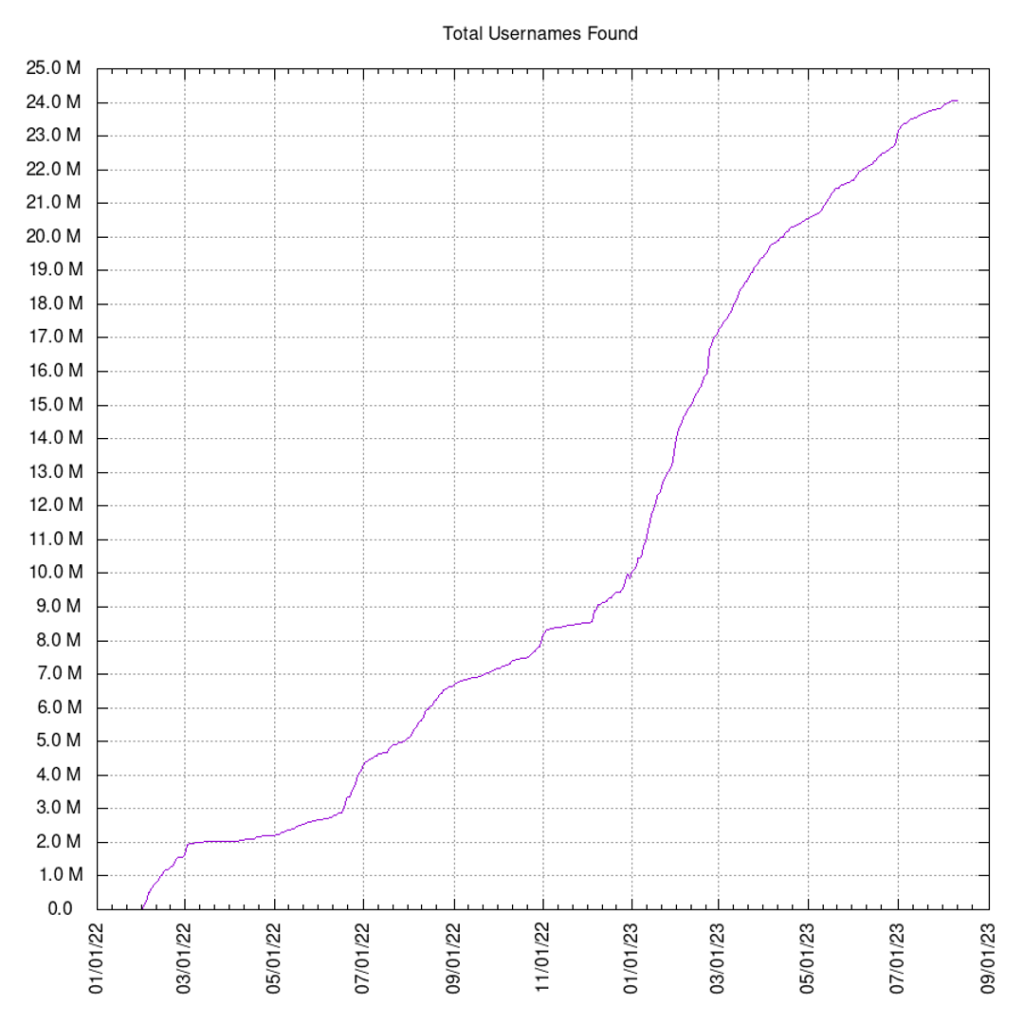

Over the course of a year and a half, I was able to enumerate 24 million users via Microsoft OneDrive. The enumeration method is a simple web request, and is silent in the sense that end-users cannot see it happening. The enumeration is so simple it could be done manually with a web browser, browsing to a page to see if it exists. The whole enumeration project was run on a small group of virtual servers, tied together with ssh, rsync, mysql, php and a python script. What I did is not complicated. Any motivated high school student could do the same.

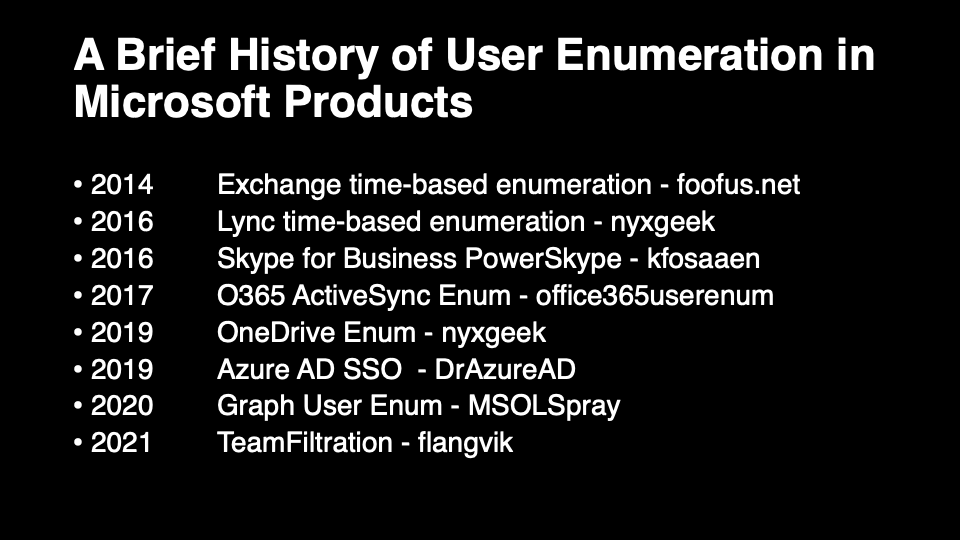

The purpose of this project was to bring attention to the fact that Microsoft does not consider user enumeration to be a vulnerability (and they haven’t for a long time).

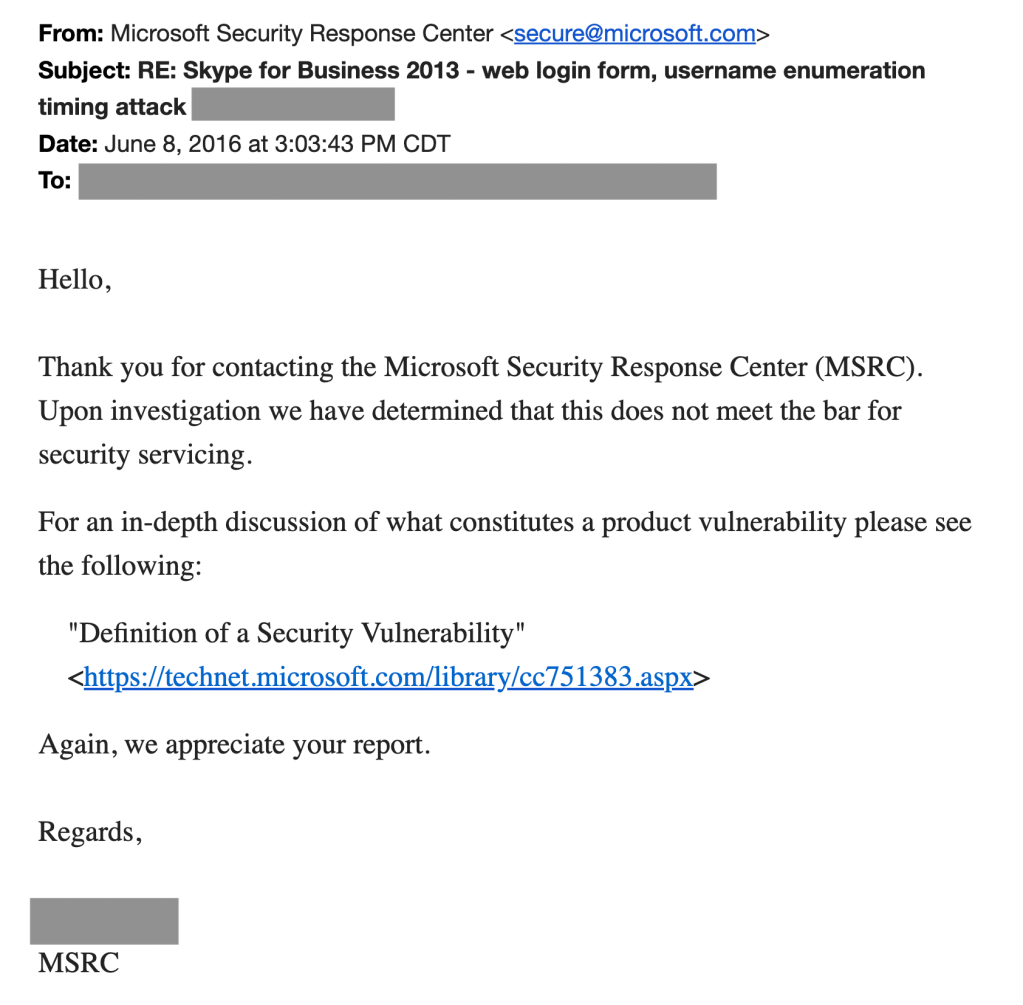

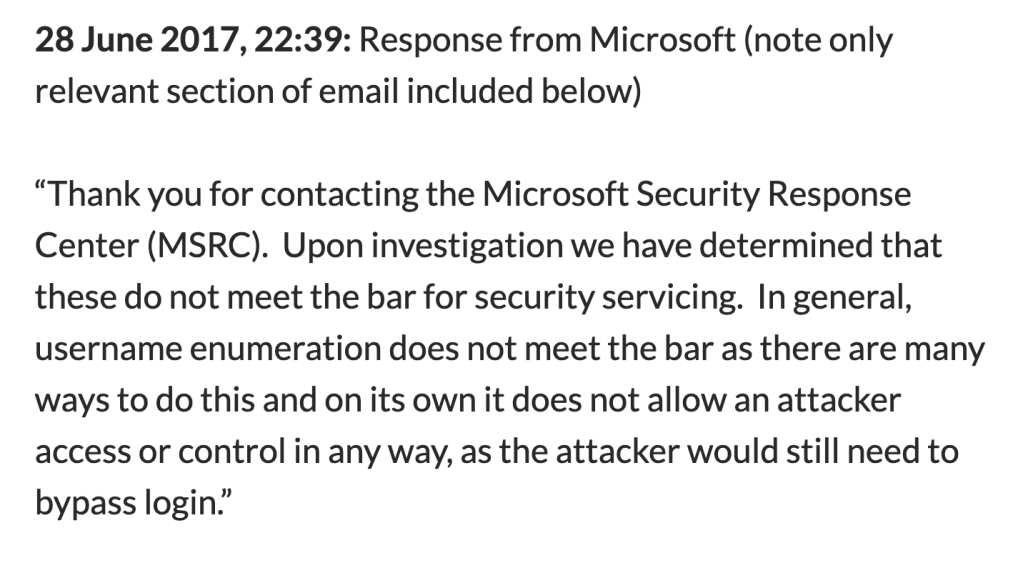

If you submit a bug report to the MSRC pointing out a user enumeration flaw, you’ll get a response back indicating that it does not meet the bar for security servicing. The exact reasons vary slightly, but revolve around a username being only one part of an authentication process, and that a password is still required.

Reasons given over the years include:

- On its own it does not constitute a vulnerability

- There are numerous other ways to do user enumeration already (lol! this leads in circles — so we won’t fix it, because it already exists? And it exists, because we won’t fix it.)

- It’s a feature, working as designed

- Usernames are similar to knowing software used, or an IP address

Now, if Microsoft truly means what they say — if user enumeration is not a security vulnerability — then nobody should mind if we make use of this feature.

OneDrive Enumeration

Basics

For details on how OneDrive enumeration works, see my blog post at TrustedSec: OneDrive to Enum Them All

In short, when OneDrive is accessed for the first time, it creates a URL on Microsoft’s servers. This URL has the format:

[tenant]-my.sharepoint.com/personal/[username]_[domain]/_layouts/15/onedrive.aspx

An example:

https://acmecomputercompany-my.sharepoint.com/personal/lightmand_acmecomputercompany_com/_layouts/15/onedrive.aspx

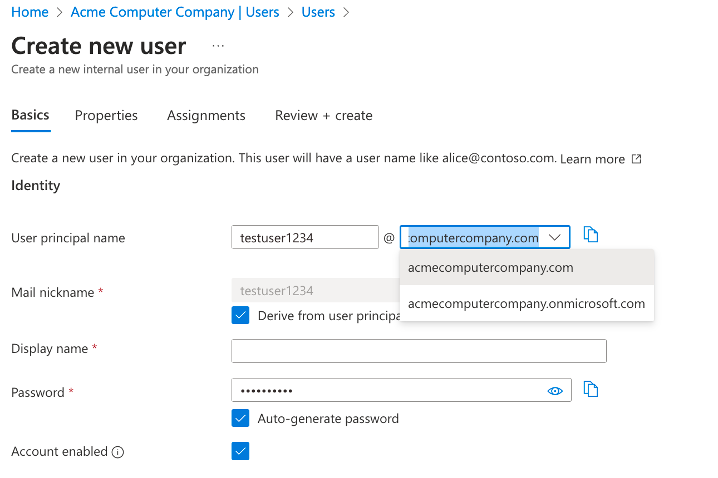

There are a few required pieces of information — tenant, domain, and username. This combination of username + domain is actually a translation of the UPN (user principal name) for the account.

In this case, lightmand@acmecomputercompany.com was the actual UPN, and it was translated to ‘lightmand_acmecomputercompany_com’. You can see that the symbols (here ‘@’ and ‘.’) are simply replaced with underscores.
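The translation above can be sketched in a few lines of Python. This is a minimal illustration, not code from onedrive_enum.py: the function names are made up for this example, and it assumes only ‘@’ and ‘.’ need replacing, as in the example UPN (other symbols are not handled).

```python
def upn_to_personal_path(upn: str) -> str:
    """Translate a UPN such as user@example.com into the OneDrive path segment."""
    # Assumption: only '@' and '.' are replaced, matching the example above.
    return upn.replace("@", "_").replace(".", "_")


def onedrive_url(tenant: str, upn: str) -> str:
    """Build the OneDrive URL for a tenant name and a UPN (format shown above)."""
    return (
        f"https://{tenant}-my.sharepoint.com/personal/"
        f"{upn_to_personal_path(upn)}/_layouts/15/onedrive.aspx"
    )


# Rebuilds the example URL shown earlier in this section.
print(onedrive_url("acmecomputercompany", "lightmand@acmecomputercompany.com"))
```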

When an administrator creates a new user they must choose which domain to associate the account with, and this forms their UPN.

This UPN is what is being enumerated with OneDrive enumeration (and all Azure user enumeration methods for that matter).

That’s how it works!

Getting Tenant Info

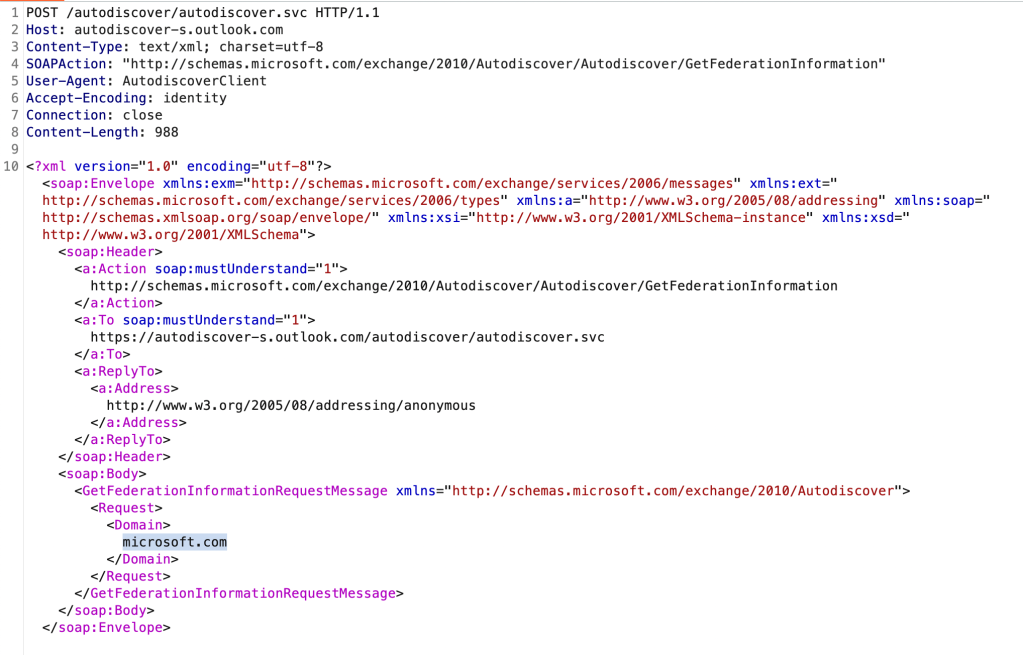

Because of this requirement for both tenant and domain name, OneDrive enumeration is a little more complicated than some other methods of user enumeration. Luckily, the tenant name can be looked up via a Microsoft endpoint. This can be done by a variety of tools, but they all use the same lookup endpoint that was identified by @DrAzureAD and implemented in AAD-Internals.

- AAD-Internals (the OG – https://github.com/Gerenios/AADInternals)

- TrevorSpray (https://github.com/blacklanternsecurity/TREVORspray)

- OneDrive_Enum (https://github.com/nyxgeek/onedrive_user_enum)

- AAD Internals OSINT Tool (https://aadinternals.com/osint/)

- CloudKicker (https://github.com/nyxgeek/cloudkicker – not yet released)

On the backend, here is the actual request:

You can see we have supplied the domain, ‘microsoft.com’.

I’ve included the text of this request here: https://github.com/nyxgeek/tron_clu_servers/blob/main/autodiscover_tenant_lookup_request.txt

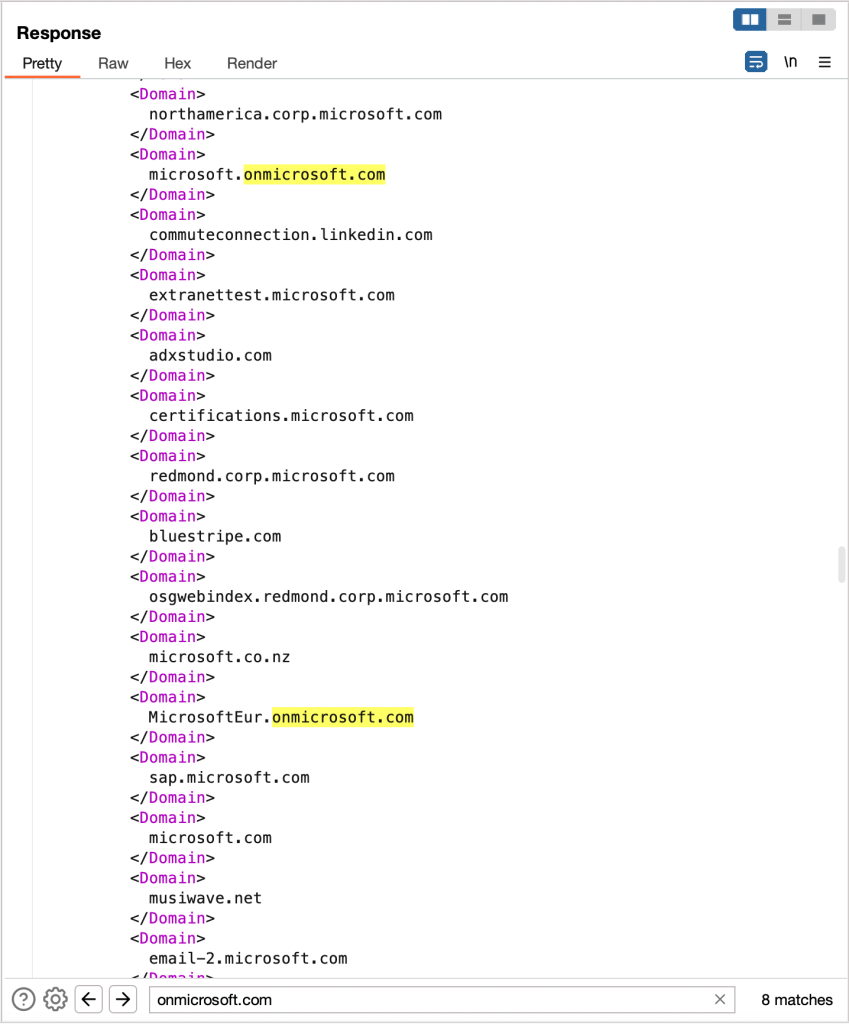

Now the results:

We get a list of the associated domains. Notice that I have searched for ‘.onmicrosoft.com’. These onmicrosoft addresses are tenant names. Above, you can see both ‘microsoft’ and ‘microsofteur’. Digging in, we can see there are actually 6 unique tenants in use:

Note: Most companies will only have one, so it won’t be a problem. However, in the case of a multi-tenant organization, you won’t know which tenant is their primary, and they may be using multiple. Users can be provisioned into any tenant, but usually are only created in one.
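Picking the tenant names out of a returned domain list is easy to script. The sketch below is only an illustration: tenant_names is a made-up helper, and it assumes the lookup results are already available as a plain list of domain strings. It also assumes that a domain with extra labels in front of ‘.onmicrosoft.com’ belongs to the tenant named by its first label.

```python
def tenant_names(domains):
    """Return the unique tenant names found in a list of domain strings."""
    suffix = ".onmicrosoft.com"
    tenants = []
    for domain in domains:
        name = domain.strip().lower()
        if not name.endswith(suffix):
            continue  # custom domain, not a tenant name
        # Assumption: keep only the first label, so 'example.onmicrosoft.com'
        # and 'example.mail.onmicrosoft.com' both map to 'example'.
        tenant = name[: -len(suffix)].split(".")[0]
        if tenant and tenant not in tenants:
            tenants.append(tenant)
    return tenants


# The two tenant names mentioned above, mixed in with a custom domain.
print(tenant_names(["microsoft.com", "microsoft.onmicrosoft.com",
                    "microsofteur.onmicrosoft.com"]))
```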

Getting Domain Info

So we have the tenant name. We also require the target domain. Luckily the same endpoint that gets us the tenant name(s) gets us the domain names. These are referred to as ‘custom domain names’ in Azure. They are registered to the account. Usually the UPNs will be provisioned with a primary domain. This will often be the domain that is the company’s website domain, but if that name is long, they will often go for an abbreviation.

For instance, if a company’s main site is at acmecomputercompany.org, but they also have a custom domain listed for ‘acmecc.org’, they very well might use that shorter domain as their UPN, since this becomes an email address in Azure. Beware, they could also choose the tenant.onmicrosoft.com domain as their UPN, so if you don’t find any users on their custom domains, check at the onmicrosoft domain.

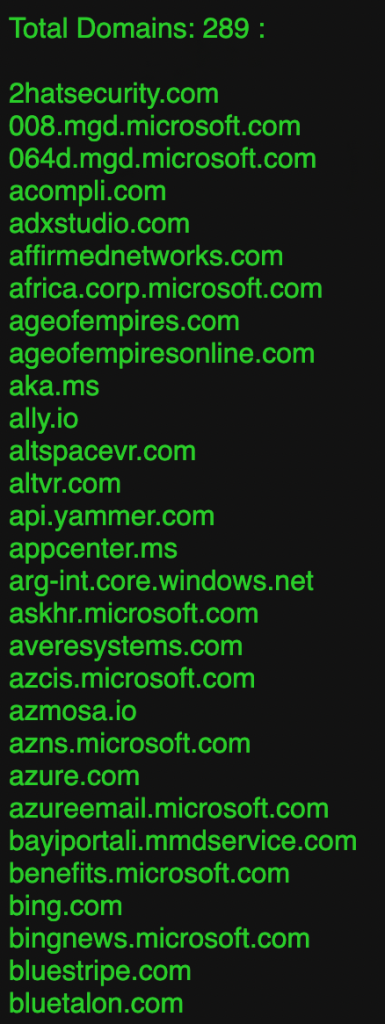

Here are the results for Microsoft from the CloudKicker tool. They have a LOT of domains registered:

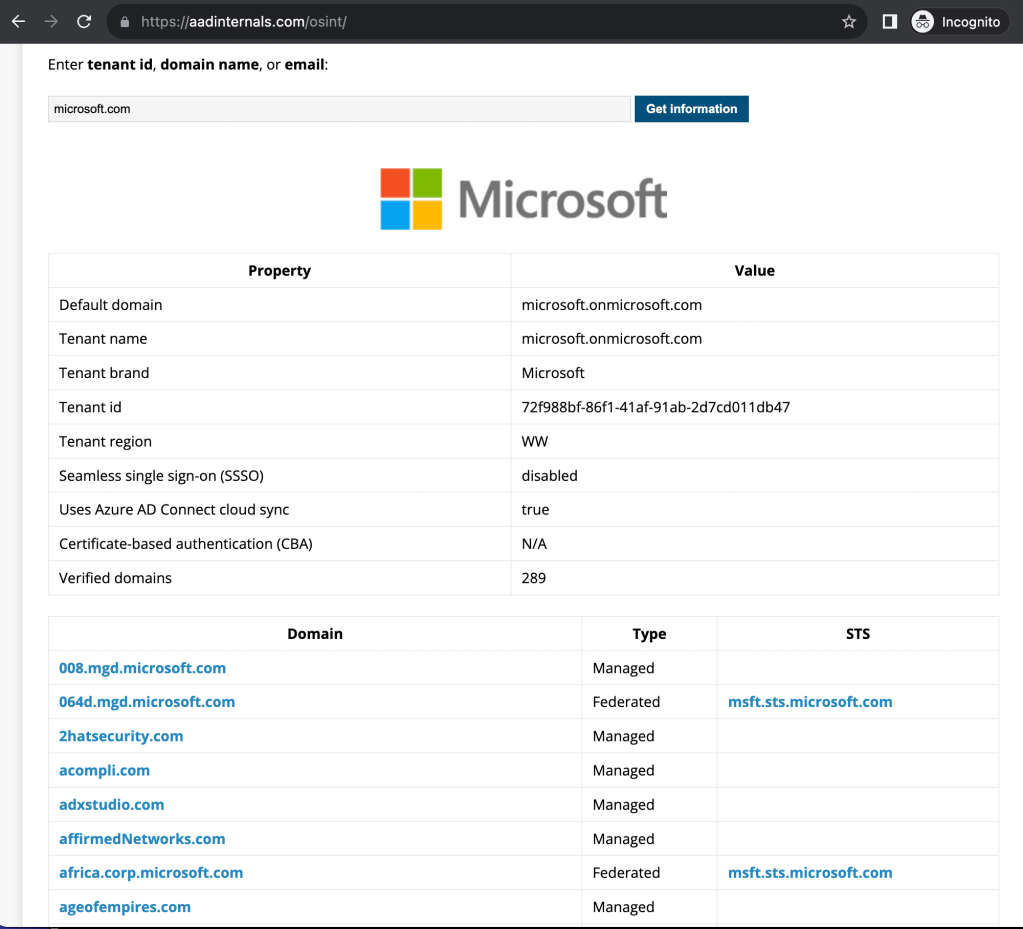

Here is the OSINT tool from aadinternals.com:

You can also search this output for ‘.onmicrosoft.com’ to find the tenant names.

At any rate, it’s easy to identify the tenant or tenants and possible domains, so long as you know where to look.

OneDrive to Rule Them All

The OneDrive enumeration method only enumerates users who have logged into M365 services (including Word, Excel, etc). When many of these services are accessed, a OneDrive URL is created to enable saving files. (This was tested on a Windows VM and was accurate as of Dec 2022.)

There are a variety of benefits to the OneDrive enumeration method:

- Simple HTTP request to identify valid users

- No logon attempt is made (no CFAA unauthorized access)

- No authentication is required (does not require account, have not agreed to any ToS) – completely anonymous

- No logs are made (at least none that are visible to organizations)

These reasons make it the perfect method for massive user enumeration.

To make use of it at scale, we will need to set up some infrastructure.

Infrastructure Setup

The overall setup is simple. A VPS provider was used with a private network. There is one server that runs the database and hosts web dashboards, which I nicknamed TRON. Then there are the bots who do the actual work, which I nicknamed CLU.

TRON – Tactical Reconnaissance and Observation Network

- LAM(P) server

- OneDrive Database

- Web interface – monitoring

- Crontab – updating graphs etc

CLU – Cloud Lookup Utility

- Bots – workers

- Performs actual scraping, sends back to TRON db

- TRON has a /home/wordlists user

- Sync this home folder from TRON to the client

- Base image for client is replicated via a snapshot in VPS

I highly recommend using a VPS-based firewall, and configuring all your hosts to use it. This simplifies things. Block everything except port 22. I made some allowances for web ports into TRON from specific hosts.

To help you set up your own servers, I’ve shared some files on GitHub. Note that these are not comprehensive, but are meant to assist. https://github.com/nyxgeek/tron_clu_servers

TRON – Server

Overview

TRON is going to be hosting Apache and MySQL. This server is going to be storing a lot of data, and will be doing lots of SQL queries. Don’t skimp here. You’re not going to want to have to upgrade down the line once all your clients are scraping.

Besides hosting the database and web server, TRON is also where the CLU hosts will pull down their userlists, domain lists, and tools from. This is done by creating a ‘wordlists’ user on the host, which will be accessed via ssh.

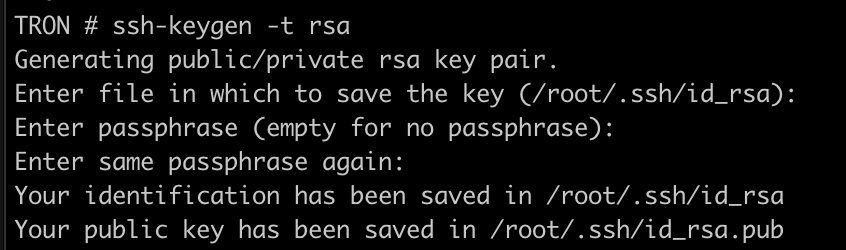

SSH

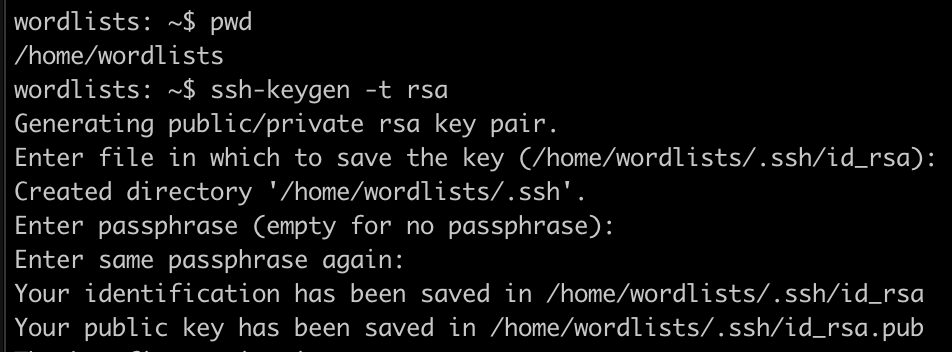

Speaking of ssh, let’s start off by generating our own ssh key for the root user if there isn’t already one. We will need this later, so that we can put it on our CLU servers. This can be done with the command:

ssh-keygen -t rsa

If you get an error that there is already a file there, go ahead and cancel and use that existing file.

Package Installation

You will need to install the following:

apt install apache2 mysql-server gnuplot

Database

The database has a few tables in it:

- onedrive_enum

- onedrive_log

- tenant_metadata (optional)

The first two already exist in onedrive_enum (but as SQLite rather than MySQL). The third, tenant_metadata, holds information such as: estimated_employee_count (int), fortune500 (bool), fortune1000 (bool), etc. This is useful for joins when filtering. At this time, creation of the tenant_metadata table is left as an exercise for the reader. It is not required for any of the initial scraping, but it will be useful later, and we will revisit it when we do our analysis.
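One possible starting point for that exercise is sketched below. It uses an in-memory SQLite database only to stay self-contained (the setup described here uses MySQL). The onedrive_enum columns (username, tenant) and the tenant key on tenant_metadata are hypothetical and may not match the real schema, and all rows are made up for illustration.

```python
import sqlite3


def build_demo_db():
    """Create an in-memory database with made-up rows for illustration."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE onedrive_enum (username TEXT, tenant TEXT);  -- hypothetical columns
        CREATE TABLE tenant_metadata (
            tenant TEXT PRIMARY KEY,           -- hypothetical join key
            estimated_employee_count INTEGER,
            fortune500 INTEGER,                -- 0 or 1
            fortune1000 INTEGER                -- 0 or 1
        );
        """
    )
    conn.executemany(
        "INSERT INTO onedrive_enum VALUES (?, ?)",
        [("alice", "acme"), ("bob", "acme"), ("carol", "smallshop")],
    )
    conn.executemany(
        "INSERT INTO tenant_metadata VALUES (?, ?, ?, ?)",
        [("acme", 50000, 1, 1), ("smallshop", 12, 0, 0)],
    )
    return conn


def found_per_fortune500_tenant(conn):
    """Count found users per tenant, keeping only tenants flagged fortune500."""
    query = """
        SELECT e.tenant, COUNT(*) AS found
        FROM onedrive_enum AS e
        JOIN tenant_metadata AS m ON m.tenant = e.tenant
        WHERE m.fortune500 = 1
        GROUP BY e.tenant
        ORDER BY e.tenant
    """
    return conn.execute(query).fetchall()


print(found_per_fortune500_tenant(build_demo_db()))
```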

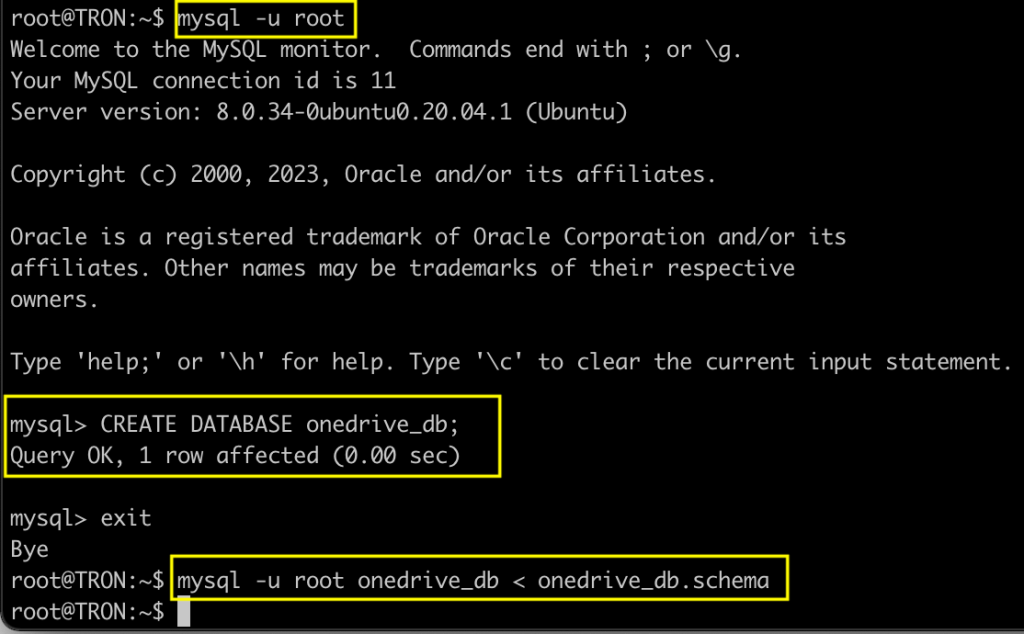

I have included a MySQL schema that can be imported here: https://github.com/nyxgeek/tron_clu_servers/blob/main/TRON/onedrive_db.schema

To import, first create a blank database called onedrive_db, then import the schema as illustrated below:

Don’t forget to create a MySQL user and grant privileges on the database to it. Here I create a user named ‘goblin’.

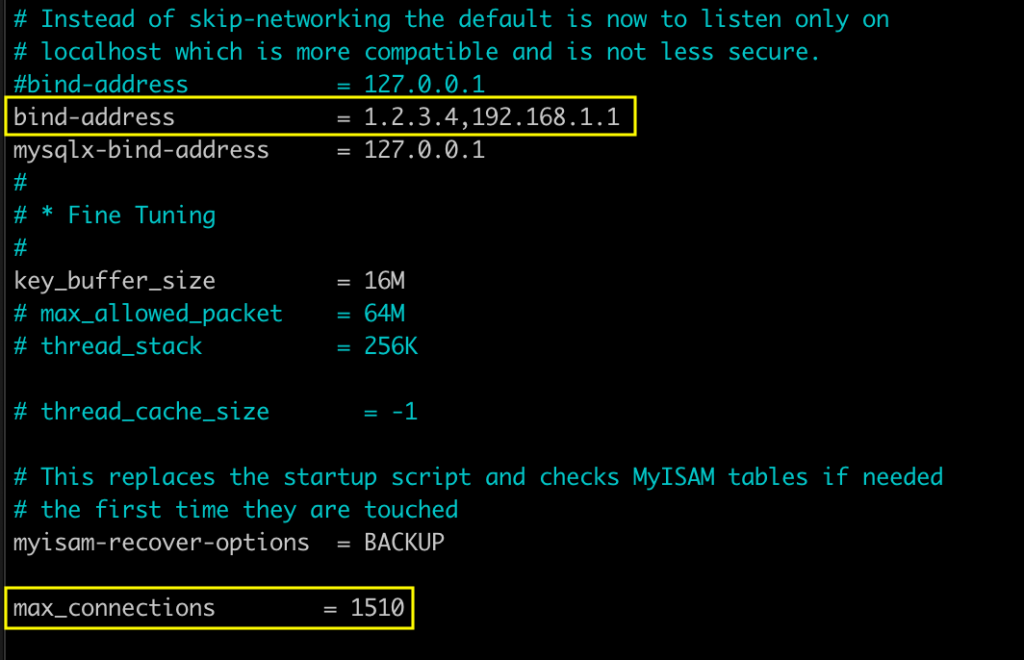

We also need to make sure mysql is reachable on the private interface (and possibly public) from the remote CLU hosts. Edit the /etc/mysql/mysql.conf.d/mysqld.cnf file, and change the bind-address to your private IP. You can specify more than one IP if you separate by commas, with no spaces.

While you’re in there, you’ll want to uncomment the line for mysql maximum connections, and set it higher. I set mine to 1510 from 151.

You may also want to increase the ulimit for file handles overall. I didn’t encounter issues until I got to about 20+ servers, but ymmv.

Additional info can be found here regarding ulimit and max open file handles:

- https://tecadmin.net/check-update-max-connections-value-mysql/

- https://www.cyberciti.biz/faq/linux-increase-the-maximum-number-of-open-files/

- https://www.percona.com/blog/open_files_limit-mystery/

- https://www.basezap.com/guide-to-raise-ulimit-open-files-and-mysql-open-files-limit/

Sync Directory

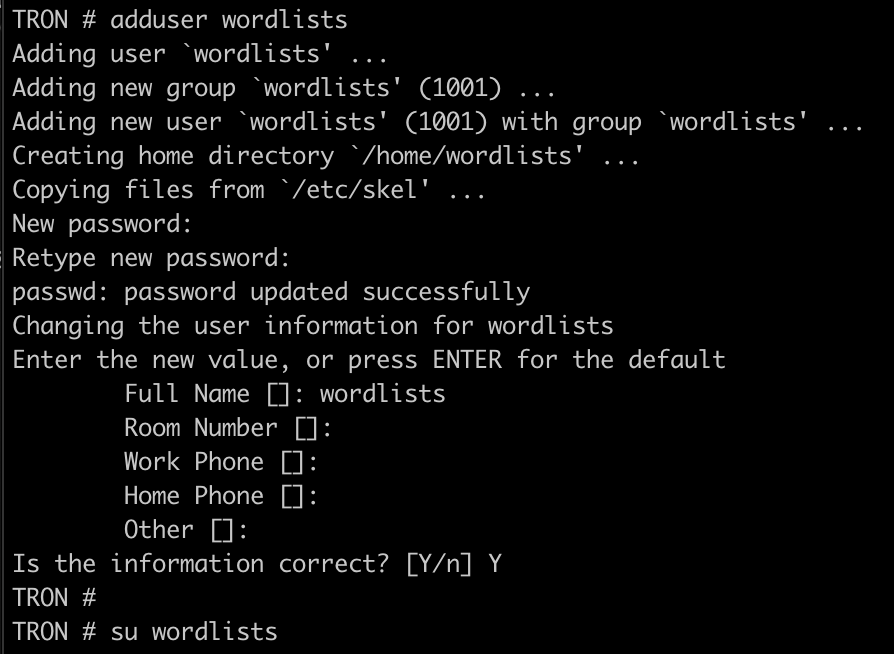

We want to be able to sync the wordlists that are on our CLU clients so that everybody has the same lists to work from. To facilitate this, we will create a dedicated ‘wordlists’ user on the TRON server which will be used by the CLU bots. We can then make changes to our sync directory on TRON, and have those changes replicated out to our CLU clients.

After creating the ‘wordlists’ user, create an ssh key for the account, using ssh-keygen. Do not configure a password on the key. We will be placing this key on the CLU bots.

This /home/wordlists/.ssh/id_rsa private key is what you’re going to want to place on your CLU servers later.

There are several files and folders:

/home/wordlists/

/home/wordlists/DOMAINS/

/home/wordlists/USERNAMES/

/home/wordlists/onedrive_enum.py

/home/wordlists/sync_files.sh

- DOMAINS: contains files with lists of tenant/domain combinations, or domains to enumerate against. More on this later.

- USERNAMES: contains folders with lists in various username formats. e.g.: /home/wordlists/USERNAMES/john.smith_1kx10k/ or /home/wordlists/USERNAMES/john.smith_500x20k/

Note: I will explain this more later, but the naming convention I’ve employed (1kx10k or 500x20k) indicates the number of first names combined with last names. 1kx10k = top 1,000 first names, top 10,000 last names.

- SYNC_FILES.SH: This uses rsync and pulls down various files from TRON, including syncing the USERNAMES and DOMAINS folders.
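To get a feel for how large each USERNAMES list is, the naming convention can be turned into a candidate count. This is a small sketch under two assumptions: that every first name is paired with every last name, and that the only suffix in use is ‘k’ for thousands. The function names are made up for this example.

```python
import re


def parse_list_label(label):
    """Parse a label such as '1kx10k' into (first_name_count, last_name_count)."""
    match = re.fullmatch(r"(\d+)(k?)x(\d+)(k?)", label.lower())
    if match is None:
        raise ValueError(f"unrecognized label: {label!r}")
    first = int(match.group(1)) * (1000 if match.group(2) else 1)
    last = int(match.group(3)) * (1000 if match.group(4) else 1)
    return first, last


def candidate_count(label):
    """Number of usernames if each first name is paired with each last name."""
    first, last = parse_list_label(label)
    return first * last


for label in ("1kx10k", "500x20k"):
    print(label, parse_list_label(label), candidate_count(label))
```

Under those assumptions, both example labels come out to the same number of candidates, just with a different balance of first and last names.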

Here is what the syncing looks like:

TRON > CLU

/home/wordlists/DOMAINS > /root/DOMAINS

/home/wordlists/USERNAMES > /root/USERNAMES

Additional Files and Scripts

There are some tasks which we will want to automate. To do this, we will make some reference files and custom scripts.

- Place a CLIENTS.txt file under /root/ on TRON, containing a list of your CLU bots. (/root/CLIENTS.txt)

- All custom scripts on TRON will be placed under /usr/games/

- All custom scripts on CLU will also be placed under /usr/games/ and will be synced from /usr/games/

- Script to run a command on all of your clients (run_command_on_clients) from TRON

- Script to show counts of users found per domain/tenant combination in the last 4 hours, 24 hours, 1 week, 1 month (last4hours, last24hours, etc) from TRON

- Script to pause/unpause all CLU operations (pause_clu, unpause_clu)

Examples of these last three items are available on the following github: https://github.com/nyxgeek/tron_clu_servers/tree/main/TRON/usr/games
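The scripts in that repository are the reference. Purely to illustrate the idea behind run_command_on_clients, here is a minimal Python sketch. It assumes CLIENTS.txt holds one hostname per line (blank lines and ‘#’ comments are skipped, which is an assumption of this example) and that TRON connects to each client as root over ssh. The function names are hypothetical.

```python
import subprocess


def read_clients(text):
    """Return hostnames from CLIENTS.txt content, skipping blanks and comments."""
    hosts = []
    for line in text.splitlines():
        line = line.strip()
        if line and not line.startswith("#"):
            hosts.append(line)
    return hosts


def build_ssh_commands(clients, remote_command, user="root"):
    """Build one ssh argument list per client for the given remote command."""
    return [["ssh", f"{user}@{host}", remote_command] for host in clients]


def run_on_clients(clients_path, remote_command):
    """Run the command on every client in turn; return {host: exit code}."""
    with open(clients_path, encoding="utf-8") as handle:
        clients = read_clients(handle.read())
    results = {}
    for host, argv in zip(clients, build_ssh_commands(clients, remote_command)):
        results[host] = subprocess.run(argv).returncode
    return results
```

With this sketch, something like run_on_clients("/root/CLIENTS.txt", "touch /tmp/PAUSEFILE") would be the pause case.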

Dashboards – Web Server

It’s important to be able to keep track of what your bots are doing. When you have just a few it’s no problem to manually monitor. However, once you get more hosts, it becomes too time-consuming. The solution: web dashboards to display running jobs and found count over time.

I’ve included some bash scripts that use gnuplot to create visualizations. These will be run with a cronjob which we will set up next. Put these files into place under /root/SCRIPTS/ and install gnuplot (apt install gnuplot).

https://github.com/nyxgeek/tron_clu_servers/tree/main/TRON/root/SCRIPTS

Cron Jobs

We will use cron for a variety of tasks. This includes: updating dashboard graphics, checking on tasks running on clients, backing up the mysql db. I’ve included a file with some cronjobs in it here:

https://github.com/nyxgeek/tron_clu_servers/blob/main/TRON/etc/installing_crontab.txt

CLU – Client

Overview

CLU is pretty simple. Grab wordlists from TRON. Grab scripts from TRON. Run enumeration. Log it to a db on TRON. I’m fairly certain this is going to disappoint people, but there was no awesome C&C used. Just ssh and rsync.

Creation of base images

To make deploying additional CLU hosts easy, we are going to configure one VPS host and use that as a template for our others. This will be our “golden image”.

General Configuration

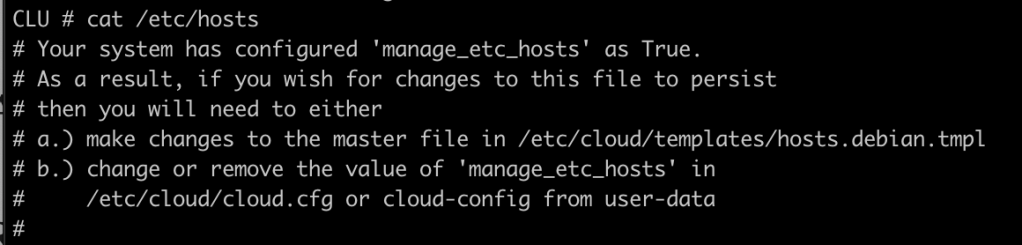

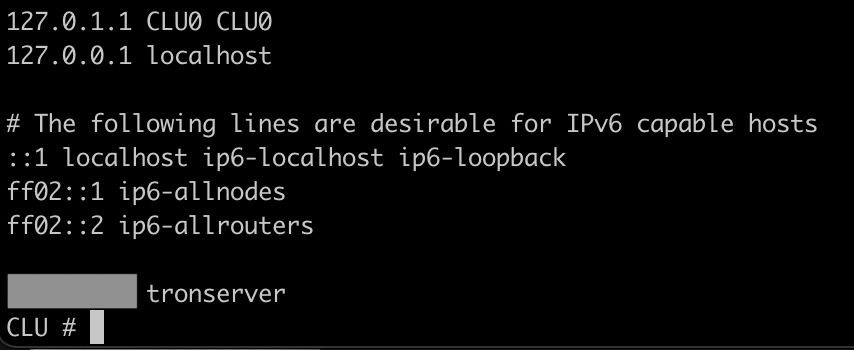

The first change to make to the base system is to add a DNS entry for your TRON server to the /etc/hosts file. Depending on your system, you may need to disable some cloud-init setting. If you see the following in your hosts file:

Go and edit /etc/cloud/cloud.cfg, and comment out the line that says:

“ - update_etc_hosts”

Label the TRON server as tronserver, providing the IP address of the TRON server before it:

It is important that you use ‘tronserver’ specifically because that is how the server will be referenced in some of our support scripts.

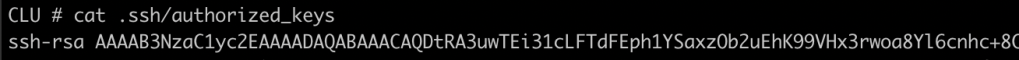

Next, we want to add our SSH public key (/root/.ssh/id_rsa.pub) from TRON to our authorized_keys file. This will allow TRON to push out changes and run commands.

CLU Files

We run CLU as root and for ease we will place our files under /root/

/root/.ssh/id_rsa

/root/sync_files.sh

/root/USERNAMES/

/root/DOMAINS/

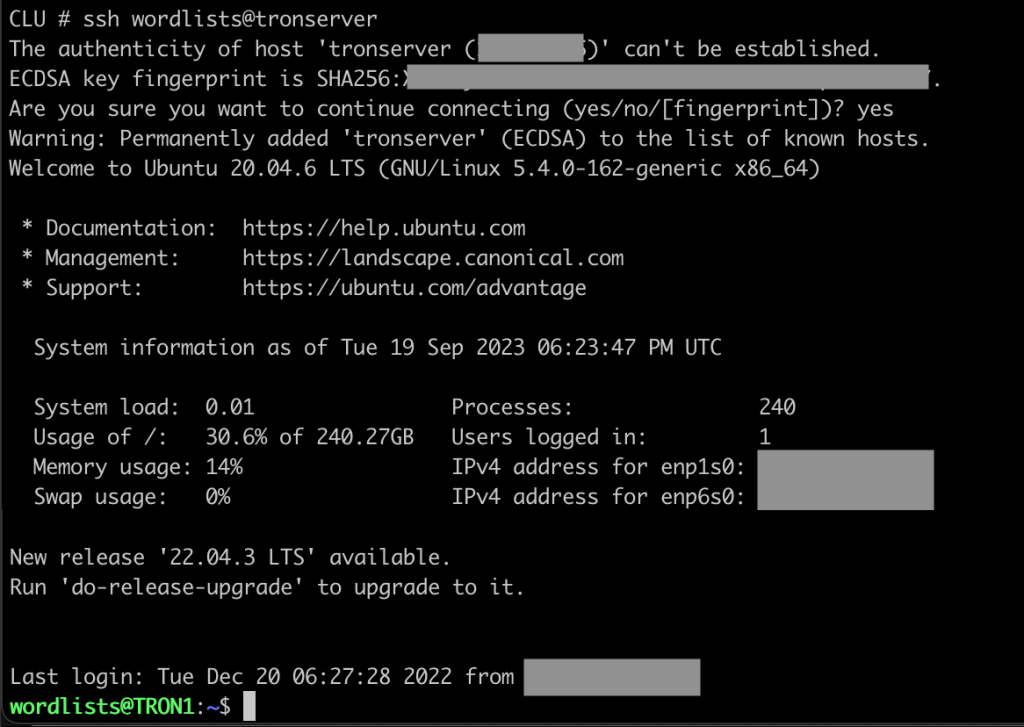

Two files are necessary: sync_files.sh and the id_rsa ssh key. Copy the id_rsa ssh key from /home/wordlists/.ssh/id_rsa on TRON to /root/.ssh/id_rsa on CLU.

The sync_files.sh script will pull down updated USERNAMES and DOMAINS folders. Be sure to chmod 700 the id_rsa file or it will complain about loose permissions. To verify that it is working, manually ssh to your TRON server using the wordlists username. This will also put the host in the known_hosts file.

If you have some base wordlists or domain lists you may want to store them in the golden image. However, in my experience, it is just as easy to pull them fresh when you deploy.

Here is our actual sync_files.sh script:

Note that it will delete any USERNAMES or DOMAINS files that are subsequently removed from either of those folders, keeping those folders in sync.
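The delete behavior corresponds to rsync’s --delete option. The sketch below is not the sync_files.sh script itself. It only builds the kind of rsync command line that would give the behavior described, assuming the ‘wordlists’ user, the ‘tronserver’ host entry, and the folder layout from this post. The function name is made up for this example.

```python
def rsync_pull_command(folder,
                       remote="wordlists@tronserver",
                       remote_base="/home/wordlists",
                       local_base="/root"):
    """Build an rsync command that mirrors one TRON folder onto this CLU host."""
    return [
        "rsync",
        "-a",        # archive mode: recurse and preserve times and permissions
        "--delete",  # remove local files that no longer exist on TRON
        f"{remote}:{remote_base}/{folder}/",
        f"{local_base}/{folder}/",
    ]


for folder in ("USERNAMES", "DOMAINS"):
    print(" ".join(rsync_pull_command(folder)))
```

The trailing slashes matter: they make rsync sync the contents of the folder rather than nesting a second folder inside the destination.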

OneDrive_Enum.py Modifications

The major change we have to make is to add a MySQL connector to OneDrive_Enum.py. Additionally, a PAUSE feature was added. The PAUSE feature is useful if you need to work on TRON (for example, to upgrade the disk size) but have a bunch of clients sending data back. A modification was made to check for the existence of a /tmp/PAUSEFILE. Using our ‘run_command_on_clients’ script to do a ‘touch /tmp/PAUSEFILE’ is an easy way to halt all scraping operations.

Adding MySQL Connector

This is a fairly straightforward swap. Now you can add -m db.conf where db.conf is a config file like:

[mysql]

host = myserver.notafakedomain.com

database = onedrive_db

user = goblin

password = s3cretp4sSw0rdCh4ng3Me

port = 3306

Adding PAUSEFILE support

This is a feature to pause all operations on a CLU. Very useful if things start going FUBAR on your TRON server. Since we may have many threads open, we are going to want each thread to check for this file’s existence at /tmp/PAUSEFILE.
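A per-thread check of this kind can be very small. The following is a minimal sketch of the idea, not the code from OneDrive_Enum.py. The function name and the 30-second polling interval are assumptions made for this example.

```python
import os
import time

PAUSEFILE = "/tmp/PAUSEFILE"


def wait_while_paused(pausefile=PAUSEFILE, poll_seconds=30, sleep=time.sleep):
    """Block for as long as the pause file exists; return the seconds waited."""
    waited = 0
    while os.path.exists(pausefile):
        sleep(poll_seconds)  # check again later instead of busy-looping
        waited += poll_seconds
    return waited
```

Each worker thread would call wait_while_paused() before doing its next unit of work, so that creating the file stops all threads and removing it lets them carry on where they left off.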

This feature goes with the TRON commands pause_clu and unpause_clu: https://github.com/nyxgeek/tron_clu_servers/tree/main/TRON/usr/games

Both of these features and more are available in OneDrive_Enum.py 2.10:

https://github.com/nyxgeek/onedrive_user_enum
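For reference, a db.conf file in the format shown under “Adding MySQL Connector” can be read with Python’s standard configparser module. This is a sketch of one way to do it, not necessarily how OneDrive_Enum.py does it. The function name is made up, and the fallback to port 3306 is an assumption of this example.

```python
import configparser


def load_mysql_settings(path):
    """Read the [mysql] section of a db.conf-style file into a dict."""
    # interpolation=None so a '%' in the password is taken literally.
    parser = configparser.ConfigParser(interpolation=None)
    if not parser.read(path):
        raise FileNotFoundError(path)
    section = parser["mysql"]
    return {
        "host": section["host"],
        "database": section["database"],
        "user": section["user"],
        "password": section["password"],
        "port": section.getint("port", fallback=3306),
    }
```

The resulting dict holds the values a MySQL client library would typically need to open a connection.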

Replicating – Building Your Bot Collection

Okay great, we have our CLU golden image configured how we want it. In your VPS provider, create a snapshot or an image of this server. We will then use this image to deploy additional servers.

From your golden image, deploy a single new server. Ensure that everything is working properly. If it is not, or if you need to fix something, no problem! Make the change, make a new snapshot. Re-deploy and test. Easy.

Most VPS providers have a limit on how many VPS instances you can have. Some will have an option to request an increase, usually with some type of question as to why. BE SURE TO READ YOUR TERMS OF SERVICE BEFORE SUBMITTING ANY REQUESTS TO SUPPORT. Don’t get yourself banned from a VPS platform if you can avoid it.

Why Not <xyz> Solution?

You’ll notice that I am using OLD technologies here. ssh, rsync. They’re great tools that have stood the test of time. We can do all our C&C for our servers over SSH. It’s easy to write bash scripts that are easily modded, nothing compiled. This is the most straightforward path to doing this, requiring only a knowledge of linux and some basic sysadmin.

I had contemplated creating this in Kubernetes, which would be nice because you could automate scaling up and down on-demand. The main thing that stopped me from doing this is the source-origin of the scans coming from a single or small group of IPs. This could be fine for a small number of hosts, but if you have 40 hosts you want that traffic to be originating from a variety of sources. I realize you could probably create some round-robin type routing for outbound through some additional IPs you buy, but this additional complexity is not where I want to spend my time. There’s also a question of whether it would be worth it to move to K8s. A single VPS host is around $6 and comes with its own IP.

Maintenance and Security

Now that you have your infrastructure up, we want to make sure that it runs, and runs securely.

Perform Port Scans: Grab yet another VPS host, this time from a new provider (if you are on Linode, use Vultr, or GoDaddy, etc). You’re going to want to set up regular scans of all your hosts to make sure you didn’t mess up something in your config and expose all your hosts. Create a cron job and have it email you the results.

Backup your Data(base): MAKE BACKUPS. MAKE SURE YOUR BACKUPS ARE GOOD. You don’t want to lose all this work that you’re paying for. Actually verify that your backups are being pulled down regularly, and that the files that are pulled down contain what you think that they contain (and that they decompress successfully).
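As one way to automate that check, the sketch below assumes the backup is a gzip-compressed SQL dump in which each table appears as a line starting with CREATE TABLE and the table name in backticks. Both are assumptions about your backup format. The function name is made up for this example.

```python
import gzip


def missing_tables(path, expected_tables):
    """Return the expected table names not found in a gzip-compressed SQL dump."""
    remaining = set(expected_tables)
    # Reading to the end forces full decompression, so a truncated or corrupt
    # archive raises an error here instead of passing silently.
    with gzip.open(path, "rt", encoding="utf-8", errors="replace") as dump:
        for line in dump:
            if line.startswith("CREATE TABLE"):
                remaining = {name for name in remaining if f"`{name}`" not in line}
    return sorted(remaining)
```

An empty list back from missing_tables(path, ["onedrive_enum", "onedrive_log"]) means the file decompressed fully and both tables were found in it.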

That’s all for now!

If anybody has any questions, would like an area expanded on, or wants to tell me I’m an idiot for doing something a certain way, feel free to DM me on X/Twitter. @nyxgeek

Next Up:

https://nyxgeek.wordpress.com/2023/10/05/enumerating-24-million-users-part-2/

Coming Soon:

– Data Analysis